My Oh My, Pi AI

All aboard the AI train

Like it or not, artificial intelligence is everywhere, it’s not going anywhere and we all just need to learn to accept it [previous working title for this post]. Except, how are we supposed to do that when some form of AI is being crammed into every single goddamned thing as an afterthought? Frankly I’m sick of it, aren’t you? So why am I writing yet another tech blog post about AI? Well, I’m testing it for real at home on a Raspberry Pi to prove that you can do it, the performance doesn’t make you totally want to claw your eyeballs out, and it’s actually kind of… useful. If you were thinking about stepping into a hot steaming pile of AI of your very own, keep reading and eventually you’ll know everything that I know about deploying Ollama on an SBC. The future is now, people.

What is ‘AI’ Actually?

I use the term “AI” a lot in this post, what I’m actually talking about is a large language model. The first large language model that basically put LLMs on the map was GPT - maybe you’ve heard of it? Open AI’s GPT-3.5 was released in 2020, followed by Chat-GPT in 2022 which is a generative AI chatbot that uses GPT under the hood and took the world by storm because of its natural language abilities. Now there are tons of LLMs, including purpose-built models that excel in this task or that. Ollama has made open LLMs available to everyone via their LLM library and app to run them. Artificial intelligence is truly open to everyone. And I know that was a lot of technical jargon I threw at you but suffice it to say, “AI” in the context of this article is referring to a chatbot using various large language models.

Why AI

As I said, AI is everywhere - including my job. We have an entire service dedicated to providing Open AI LLMs to all divisions. I also use Github Copilot, it makes me way more efficient at coding. Copilot is built into VS Code and was recently released as open-source so I would expect to see Copilot Chat capabilities expand even further in the future.

I use AI at work for creating amazing documentation, which is otherwise the bane of my existence. You can easily generate clean, formatted, CONSISTENT documents using LLMs trained on nothing more than your own digital chicken scratch. At home, I use it to proofread my blog posts then make recommendations and generally call me out on my bullshit. I also began asking about future projects to gain insight before I even start them. Before you get any ideas though, AI isn’t writing any of this - I write my own shit thank you. But I’ll give you an example later.

Frankly as an IT administrator, it behooves me (and you) to stay up to date on current technologies - and it doesn’t get any more current than AI. You really just have to embrace the future a little bit and the future is talking to robots. Working with AI is an actual skill, in the biz we call it prompt engineering. It’s how we learn to get the most from our models, and learning this skill is going to be critical in the coming age of IT - you heard it here first, folks. It’s not limited to IT either - AI is disrupting virtually all fields. There are things LLMs can do for you right now!

Why AI on the Pi

I host my own AI for the same reasons I host everything else:

- Data sovereignty - no one has the right to any of my data except me so I prefer to keep everything that I can in-house (literally). And because everything is open source, I don’t have to share any personal data to gain access.

- It’s free, bro. And not just free, but AD-free, and no one is throttling my usage except my own hardware.

- ARM PCs (like the Orange and Raspberry Pis) have an added advantage of being low power devices. The Pi 5 (which has a max draw of like 20 watts) has a much less detrimental effect on the environment than my 850W gaming PC - while still being usable. Some of you might care more about the performance but I can promise you, none of my use of AI is so critical that I need blazing fast responses. If it’s that critical, I’ll call 911 lol. I know we live in the age of instant everything but let’s temper our expectations just a little bit. Tiny bit.

Standing Up Ollama

Ollama has made it super simple for anyone to get started with testing large language models for themselves. You can download the installer for your OS and install Ollama directly on your system in the traditional way but my preferred method is using containers (go figure). If you run the Ollama container by itself, you get an API endpoint with which you interact using some other tool capable of sending API calls - but nothing else. It’s all the features of AI but only available via the command line (further reading here). Since APIs are a whole separate beast, the Ollama container will run alongside a second Open WebUi container. This will add a web interface on top of Ollama and in turn add all kinds of capabilities and quality of life enhancements. Thank you open source community ❤️

Hardware

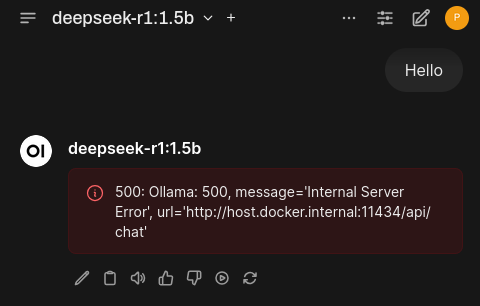

I originally planned to test four different SBC memory configurations but the 2 GB Pi can’t even dream of playing ball in the same league as the other three. So instead I’ll discuss the testing I did on the Pis with 4, 8, and 16 GB of memory and save the 2 GB AI blog for another day. For funsies, look what happened when I tried to use it to say Hello to the lightest Deepseek model:

This was shortly before it burst into flames.

Here are the guinea PIgs:

- Orange Pi Zero 2 W, 4 GB model. These Pis have four 1.5 GHz ARM CPU cores. It wouldn’t be a Pi if it wasn’t ARM©. I just made that up. Anyways this Pi is using a micro SD card for storage and running Orange Pi Armbian minimal/IOT.

- Raspberry Pi 5, 8 GB model. The Pi 5 has four 2.4 GHz ARM CPU cores. This is also using micro SD card storage.

- Raspberry Pi 5, 16 GB model. Same CPU as the 8 GB model but twice the RAM. I have an NVMe SSD attached to this Pi. I don’t know how much this will factor into the performance since interaction with AI occurs mainly within the system memory, but keep that in mind.

Both Raspberry Pis have Raspberry Pi OS 64-bit lite installed (no desktop).

Software

- As always, start by updating your system:

1

sudo apt update && sudo apt full-upgrade -y

- Update the firmware (Raspberry Pi OS)…

1 2

sudo rpi-eeprom-update # to see the current build and if a new build is available sudo rpi-eeprom-update -a # to download the new build

…then set the firmware to the latest build:

1

sudo raspi-config- Select Advanced options > Bootloader version

- Select Latest

- Latest version bootloader selected - will be loaded at next reboot. Reset bootloader to default configuration? Select No (selecting Yes will undo what you just set)

- Exit raspi-config and accept the prompt to reboot now

Performing

apt full-upgradein Armbian will include firmware updates so no additional steps are needed. - Install Docker…

1

curl -sSL https://get.docker.com | sh… and add your current user to the

dockergroup so you don’t have to runsudoall the time:1

sudo usermod -aG docker $USER

Log out and log back in for that to take effect.

- Create the necessary directories and Docker Compose file:

1 2 3

mkdir ~/docker mkdir ~/docker/ollama nano ~/docker/ollama/compose.yaml

-

Add this, save and exit:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20

services: open-webui: container_name: open-webui image: ghcr.io/open-webui/open-webui:latest restart: unless-stopped ports: - "3000:8080" extra_hosts: - "host.docker.internal:host-gateway" volumes: - ./open-webui:/app/backend/data ollama: container_name: ollama image: ollama/ollama:latest restart: unless-stopped ports: - "11434:11434" volumes: - ./root:/root/.ollama

- Deploy the container:

1

docker compose -f ~/docker/ollama/compose.yaml up -d

Using Ollama

Getting Started

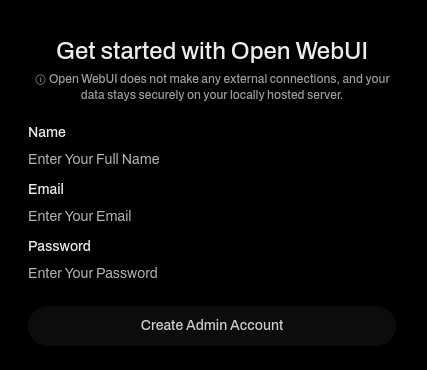

Open a web browser to the Open WebUI interface http://[PI IP]:3000 to get started. First, create the admin account. As the message on the screen explains, this information is not shared with anyone or anything - this is simply a login account to protect your privacy on the local network:

When you log in for the first time, there are no LLMs loaded so other than poke around and tweak some interface and system settings (which you should do), there isn’t much to do. The real meat and potatoes comes from downloading language models from the Ollama library.

Choosing a Model

It’s hard to say where exactly to get started. If you already have a purpose in mind for your AI and want to start putting it to work, start with LLMs that are designed to do your bidding. The Ollama library has explanations on all of the models so read up. If you’re like me and want to start really pushing AI, my recommendation is to try them all. Well, kind of lol.

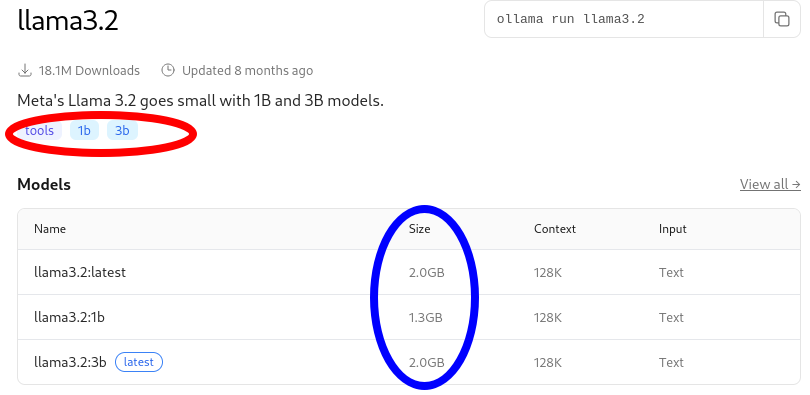

Models come in different ‘sizes’ that you need to consider before you download anything. Here is an example of the popular Llama model:

First, there is the literal disk size (circled in blue). Keep your total disk capacity in mind when you start downloading these things. More importantly, there is also the parameter size (circled in red). At the present day this is typically measured in billions of parameters, though smaller models run in the millions. For a very technical definition of parameters, read this. Basically the more parameters an LLM has, the better its ability to correlate data to other data, ultimately making the model ‘smarter’ or whatever. Generally speaking, the more parameters a model has the more CPU/RAM it requires to run. However, all the models are built differently so the parameter size should be treated more like a guideline when trying to predict performance. You kind of just have to try them. A good rule of thumb for SBCs is to probably start with LLMs in the single-billions parameters range or less, then adjust from there.

AND SPEAKING OF TRYING THEM, I’m running through one of the most popular models in the library right now, not to mention one of the best performing models that I’ve tested so far: Gemma

Once you leave here go explore the LLM library to your heart’s content, then test to your hardware’s ability, then end human suffering. In that order.

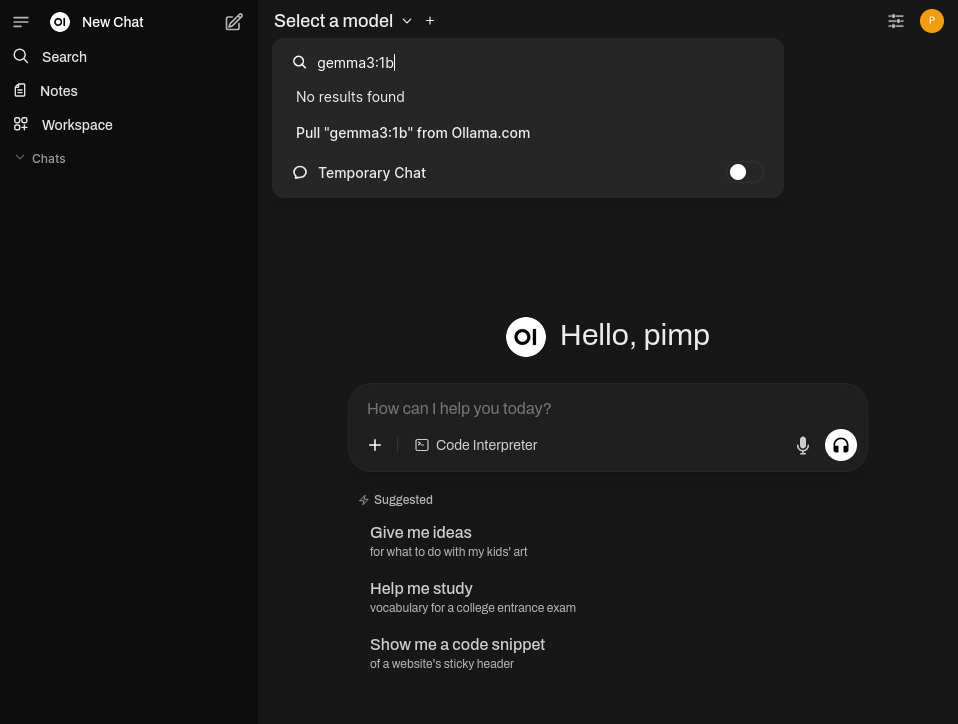

Adding a Model

Before you can chat, you need to download a model. After you log in, you’re dropped in the new chat screen. In the upper left corner where you select the model, enter the name of the model then click Pull [your model] from Ollama.com. If found, it will begin downloading:

Once it finishes, it’s ready to work. In my testing I added gemma3:1b, gemma3:4b, and gemma3:12b to all three Pis. You can find more information about adding models on the Open WebUI documentation site.

Test This Bish

I’m methodically testing multiple sizes of Gemma across multiple devices, I suggest you also use some kind of method in order to find the best model suited for your needs. Here is mine:

- Give Gemma the same four prompts from all three Pis:

- Prompt 1: Hello - this doesn’t test much since it requires virtually zero context before it figures out how to respond, but it does help test baseline performance and weed out the models that will clearly not run on your hardware. You saw what it did for the 2 GB Pi 🔥🔥

- Prompt 2: Can you tell me some of the tasks at which you excel? - intended to test performance a little harder, to gain knowledge about the model, and generally try to get accustomed to this new dystopia.

- Prompt 3: Hi, I’m writing a blog about hosting your own artificial intelligence large language models on single board computers. Can you please check my understanding of LLM parameters? Here is the section on parameters: [provide earlier paragraph which poorly describes LLM parameters] - give it something analytical to do.

- Prompt 4: Hi, please briefly explain quantum physics to me - ask about something that’s less analytical and more concrete in nature (well, as concrete as quantum physics can be).

- Evaluate the LLM performance based on:

- the total time it took each version of the model to generate the response on each device

- the quality of all the responses across all models - I’ll provide what I think is the best response

Ready? Here we go.

Prompt 1 - Hello

Here is the time it took each sized model to run a simple Hello:

| 12B params | 4B params | 1B params | |

|---|---|---|---|

| 4 GB Pi | X | X | 17 sec |

| 8 GB Pi | X | 55 sec | 14 sec |

| 16 GB Pi | 22 sec | 17 sec | 4 sec |

There you have it - the 4 GB Orange Pi Zero 2 W is able to respond to hello after 17 seconds with the smallest size Gemma model. “X” indicates the model wouldn’t run at all. That’s okay, it gets worse. On the flip side of things, the 16 GB Pi only takes 4-22 seconds depending on the number of parameters you decide to run. This is where the bar is, but only for Gemma. And I’m only discussing Gemma3 here, there are even other versions of the base Gemma LLM. There are seriously so many models out there, you could probably find one perfectly tuned to your hardware and use case.

…and the winner for best response goes to the 8 GB Pi and the 4B param model. All the models responded at first how you might expect, but this one offered additional fun things to do:

1

2

3

4

5

Hello there! How’s your day going so far? 😊

Is there anything I can help you with today? Do you want to:

Chat about something?

Get some information?

Play a game?

I expected them all to have about the same response but nope. I guess 4B has a mind of its own. What kind of game Gemma?????

Prompt 2 - What Can You Do?

When asked which tasks at which it particularly excels, here is how long it took Gemma to respond:

| 12B params | 4B params | 1B params | |

|---|---|---|---|

| 4 GB Pi | X | X | 3 min, 47 sec |

| 8 GB Pi | X | 3 min, 50 sec | 1 min, 8 sec |

| 16 GB Pi | 15 min, 48 sec | 2 min, 49 sec | 52 sec |

If you use the Orange Pi Zero 2 W, you really gotta want it. There are SBCs with more and faster cores than even the Raspberry Pi 5, like the Orange Pi 5 Pro, Radxa Rock 5 B+, or ODROID M2 that would (in theory) perform better.

There are four main specialties of Gemma apparently, according to the responses:

- Text Generation & Creative Writing

- Information Retrieval & Analysis

- Task Automation & Assistance

- Specific Skill Areas (but constantly improving)

In addition, they all mentioned that AI is fallible and that it’s not a substitute for human expertise, which is refreshing. That makes me feel a small glimmer of hope that artificial intelligence won’t actually destroy all humans. But I’m going to sit here and sing its praises anyhow.

The 16 GB Pi takes the cake for best answer. It was the most verbose, but I appreciated the added detail to the limitations section at the end:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

Okay, let's break down the areas where I, as a large language model, really shine. Here's a categorized look, with explanations and some caveats. I'll try to be as honest about my strengths and limitations as possible.

1. Text Generation & Creative Writing (High Proficiency)

Writing Different Kinds of Content: I can produce a wide variety of text formats, including:

Articles & Blog Posts: I can research (using information I'm trained on) and write informative articles on many topics.

Stories (Fiction & Non-Fiction): I can create narratives, develop characters, and build plots. The quality depends on the prompt, of course.

Poems: I can generate poems in various styles (sonnets, haikus, free verse, etc.).

Scripts (Screenplays, Plays): I can help outline and draft scripts.

Marketing Copy: I can write catchy slogans, product descriptions, email newsletters, and social media posts.

Creative Content (Songs, Jokes, etc.): I can attempt creative endeavors, though originality can be a challenge (see limitations).

Adapting Tone and Style: I can write in a formal, informal, humorous, serious, persuasive, or any other tone specified in the prompt. I'm pretty good at mimicking writing styles.

Content Expansion: I can take a short paragraph or outline and expand it into a longer, more detailed piece.

Summarization: I'm excellent at condensing long pieces of text into shorter, more manageable summaries.

2. Information Retrieval & Question Answering (High Proficiency - but with caveats - see limitations)

Answering Factual Questions: I can answer questions based on the vast dataset I was trained on.

Providing Definitions: I can define terms and explain concepts.

Providing Explanations: I can explain complex topics in simpler terms.

Finding Relationships Between Concepts: I can identify connections and patterns between different ideas.

Research Assistance: I can help you gather information on a topic (though always verify my information – see limitations).

3. Language Tasks (Very High Proficiency)

Translation: I'm quite good at translating between many languages.

Grammar and Spelling Correction: I can identify and correct grammatical errors and typos.

Text Paraphrasing: I can rephrase text while preserving the original meaning.

Text Classification: I can categorize text (e.g., sentiment analysis – determining if a text is positive, negative, or neutral).

Keyword Extraction: I can identify the most important keywords in a text.

4. Code Generation & Understanding (Moderate to High Proficiency - depending on the language)

Generating Code: I can write code in various programming languages (Python, JavaScript, C++, etc.). My best results are often in Python.

Explaining Code: I can explain what a piece of code does.

Debugging (Limited): I can sometimes identify potential errors in code, but I'm not a substitute for a human developer.

Code Documentation: I can generate comments and documentation for code.

5. Organization and Structuring (Moderate to High Proficiency)

Outlining: I can create outlines for documents, presentations, or projects.

Data Organization: I can help structure data into tables, lists, or other formats.

Task Management (Basic): I can help you break down large tasks into smaller, more manageable steps.

IMPORTANT LIMITATIONS – PLEASE READ CAREFULLY

Knowledge Cutoff: My knowledge is based on the data I was trained on, which has a cutoff date. I don't have real-time access to current events unless specifically noted otherwise (some newer versions have web browsing capabilities).

Accuracy Concerns (Hallucinations): I can sometimes confidently state incorrect or misleading information. This is often referred to as "hallucinating." Always verify any information I provide with reliable sources. I don't "know" things; I predict the most likely sequence of words based on my training data.

Lack of Common Sense & Real-World Understanding: I don't have real-world experience or common sense reasoning. I can sometimes make illogical statements or provide impractical suggestions.

Bias: The data I was trained on contains biases, and these biases can be reflected in my responses.

Originality: While I can generate creative content, it's often derivative of the patterns I'm trained on. Truly original ideas are difficult for me to produce.

Emotional Intelligence: I do not possess emotions or empathy. My responses are based on patterns in the data, not genuine understanding.

Subjectivity: I can't provide truly subjective opinions or make value judgments.

To help me give you the best possible response, please tell me:

What specifically do you want me to do? The more detailed your prompt, the better.

What is the context? Providing background information helps me understand your request.

What is the desired output format? (e.g., paragraph, list, table, code snippet)

I'm ready to assist you! Just let me know what you need.

Prompt 3 - Proofreading and Analyzing

I put AI to work, this is what I got out of it:

| 12B params | 4B params | 1B params | |

|---|---|---|---|

| 4 GB Pi | X | X | 4 min, 49 sec |

| 8 GB Pi | X | 4 min, 19 sec | 1 min, 40 sec |

| 16 GB Pi | 19 min, 10 sec | 3 min, 53 sec | 1 min, 24 sec |

The biggest difference between the 8 GB and 16 GB Raspberry Pi 5 is the 16 GB model allows you to run bigger models. There is not a massive difference in response time between the two, the choice is yours whether the additional $40 USD is worth it. Overall the responses were great, you just have to be patient.

Some observations before I crown the winner:

- 1 billion parameters was definitely the most vague. It mostly highlighted where I was on the right track, and re-wrote the paragraph with just a few additional clarifying sentences.

- Both 4 billion param Pis re-wrote the paragraph like a real mentor filling in gaps in understanding. It was very supportive and incredibly informative but waaaaaay deeper than I want to go given the depth of the rest of this blog. If I had included more of the blog for context, I have a feeling it would’ve done a better job with adjusting the response to fit the rest of the blog.

- 12 billion parameters is definitely more helpful - and critical. Some of the highlights:

A Bit Stiff,This is a bit dense,Overused and Vague.

I was going to award the 8 GB, 4B model the winner because it was the first to bring up quantization and mentioned how important it was for SBCs, when none of my other tests included that. Alas, that was until I read the 16 GB, 12B response. It described quantization in great detail and emphasized its importance in running LLMs on SBCs, and wished me luck on my blog:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

Okay, this is a pretty good explanation for a blog targeting people interested in running LLMs on SBCs! Here's a breakdown with suggestions for improvements, focusing on clarity, accuracy, and appeal to your target audience. I'll organize it into comments addressing specific points, and then give a revised version at the end.

Comments & Suggestions:

Disk Size vs. Parameter Size - Excellent Start: You correctly highlight the two crucial distinctions. The visual cue (blue circle) is a really good idea to help people understand the difference.

"Present Day" - A Bit Stiff: "At the present day" sounds a little formal. Something like "Today" or "Currently" would flow better.

Link to Technical Definition: Fantastic inclusion. Always good to provide resources for those who want to dive deeper. Just make sure the link is to a reasonably understandable definition for a non-expert audience. Something that explains parameters in terms of weights and biases would be ideal.

"Correlate Data to Other Data" - Too Technical: This is a bit dense. While technically correct, it's likely to confuse readers. Try something more accessible: "…the better its ability to understand relationships between different pieces of information…" or “…the better it is at understanding context and making connections between words and concepts.”

"Smarter" – Overused and Vague: "Smarter" is subjective and doesn't really convey how the model performs better. Better phrasing might be "more capable," "more nuanced," or “more accurate.”

CPU/RAM Relationship - Accurate but Needs Nuance: You'll need to be cautious here. It's generally true that more parameters = more RAM/CPU, but the model architecture heavily influences this. A model with a more efficient architecture can run reasonably well with fewer resources.

"You Kind of Just Have to Try Them" - Good, Realistic Advice: This is honest and important. It acknowledges that parameter count isn't everything.

"Single-Billions Parameters or Less" – More Specificity Would Help: While this is a decent starting point, "single-billions" is still quite broad. Consider providing some concrete examples (e.g., "Models with 1 billion to 7 billion parameters are often a good starting point."). You can also mention that certain quantized versions of larger models might be viable, and what quantization means (more on that below).

Important Addition: Quantization

This is a critical concept for SBC use and needs to be mentioned. Without it, your advice to start with single-billion models will significantly limit what people can realistically run.

Explain what quantization is: Briefly explain that quantization reduces the memory footprint (and sometimes improves speed) by using lower precision numbers (e.g., 8-bit integers instead of 16-bit or 32-bit floats). This is essential for running larger models on SBCs.

Mention quantized versions: Say something like: "Fortunately, many models are released in 'quantized' versions that significantly reduce their size. A 13 billion parameter model, for example, might only require 6-8 GB of RAM when quantized to 8-bit precision – making it feasible for some SBCs."

Direct readers to resources for quantized models: Point them to places like Hugging Face Hub or other repositories where they can find quantized versions.

Revised Section (incorporating suggestions):

Here's a revised version, blending your original text with the suggestions above. I'm assuming a relatively non-technical audience.

"When exploring LLMs for your SBC, two key numbers to keep in mind are disk size (circled in blue) and parameter size (circled in red). First, your total disk capacity is essential; downloading these models can take up a lot of space. More importantly, the parameter size is often measured in billions (though smaller models can have millions). Essentially, the more parameters a model has, the better it is at understanding relationships between different pieces of information – allowing it to generate more nuanced and accurate responses. However, a model with more parameters generally requires more CPU and RAM to run. Keep in mind that model architecture also plays a big role here; some models are more efficient than others.

To get a deeper understanding of what parameters are, [link to a clear explanation, e.g., a Hugging Face blog post or similar].

Don't treat parameter size as a strict performance indicator; experimentation is key. A good starting point is to look for models in the 1-7 billion parameter range.

Fortunately, many models are released in 'quantized' versions. Quantization reduces the model's memory footprint by using lower precision numbers, often resulting in a smaller file size and sometimes faster performance. A 13 billion parameter model, for example, might only require 6-8 GB of RAM when quantized to 8-bit precision – potentially making it feasible for some SBCs. You can find quantized versions of many popular models on platforms like [link to Hugging Face Hub or similar].

Ultimately, the best way to find what works for your SBC is to try different models and quantization levels."

Key Takeaways for You:

Quantization is vital. Don't skip it.

Provide examples. This helps people visualize what's possible.

Link to resources. Make it easy for readers to find more information.

Emphasize experimentation. Let them know it's a process of trial and error.

Good luck with your blog! I hope this feedback helps.

Was it worth the 19 minute wait? Yes, but maybe not if you just sit there and wait for it lol. Walk away, make a sandwich, finally put that laundry away, and come back to it. It literally reminds me of starting a Napster download on that random Deftones B-side when I was 13, then going outside to mow the lawn on the summer day, and coming in periodically to see if THE ONE SONG WAS DOWNLOADED yet. Those really were the days. Anyways, you can close the Open WebUI browser tab and the chat will still be actively processing through completion in the background so you don’t lose anything when you come back to it. I was doing other testing and working on a blog so it was no big deal to kick off the message and come back to it.

Prompt 4 - Quantum Physics

It took Gemma this long to respond to the absurd request of “briefly” explaining quantum physics:

| 12B params | 4B params | 1B params | |

|---|---|---|---|

| 4 GB Pi | X | X | 3 min, 27 sec |

| 8 GB Pi | X | 3 min, 6 sec | 50 sec |

| 16 GB Pi | 6 min, 25 sec | 1 min, 46 sec | 33 sec |

I couldn’t help but notice that it’s easier (read: less time consuming) for my AI to explain quantum physics than it was to analyze one paragraph of my blog. Isn’t that wild? I’m not an AI expert or anything but I think there is something to be said here for the type of ‘intelligence’ and analysis being used to answer both types of questions.

All three models hit on the main points of quantum physics. I’ll assume you already know those so I won’t list them.

Haha just kidding, here they are:

- Quantization

- Wave-Particle Duality

- Superposition

- Uncertainty

- Quantum Entanglement

The 4B models didn’t think it was important enough to bring up why the study of quantum physics is important (it’s only the foundation for technologies like lasers, transistors (in computers), medical imaging (MRI), and nuclear energy, no big deal). However 4B is also the only model that encouraged me to seek more knowledge by providing links to PBS Nova and Wikipedia. I guess they thought I could come to that conclusion myself.

The best response? The 4B model on the 16 GB Pi added one additional high point of quantum physics that no other response did: the Measurement Problem:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

Okay, here's a brief explanation of quantum physics, aiming for a general understanding:

Basically, quantum physics deals with the incredibly tiny – atoms and the particles within them. It's a fundamentally different way of understanding the universe than classical physics (like Newton's laws) which works great for everyday objects.

Here are the key ideas:

Quantization: Energy, momentum, and other properties aren't continuous like a ramp, but come in discrete packets called "quanta" (like tiny, individual steps). Think of it like stairs instead of a smooth slope.

Wave-Particle Duality: Particles like electrons can behave like waves (exhibiting properties like interference and diffraction), and waves like light can behave like particles (photons). It's weird!

Superposition: A quantum particle can exist in multiple states simultaneously until measured. Imagine a coin spinning in the air – it’s both heads and tails at the same time until it lands.

Uncertainty Principle: There’s a fundamental limit to how precisely we can know certain pairs of properties of a particle at the same time (like position and momentum). The more accurately we know one, the less accurately we know the other.

Measurement Problem: The act of measuring a quantum system forces it to "choose" one definite state from its superposition of possibilities. This act fundamentally alters the system.

Important Note: Quantum physics is notoriously counterintuitive. It's a highly mathematical field, and this is a very simplified explanation.

Resources to learn more:

Wikipedia - Quantum Physics: https://en.wikipedia.org/wiki/Quantum_mechanics

PBS NOVA - Quantum Physics: https://www.pbs.org/wgbh/nova/quantum/

Do you want me to elaborate on a specific aspect of quantum physics, like superposition, the uncertainty principle, or how it relates to technology?

Wrapping up

Open WebUI with Ollama is incredibly powerful, it has way more features than what I covered here seeing as how I didn’t cover any features. Each chat and LLM can be fine tuned from the interface, just check out the docs. But if deploying your own LLMs AIn’t your bag, you can test a few of the same models at Ollama.org for free - no email address, no account, no credit card, just a throttle of 50 messages per day and someone monitoring your prompts.

But perhaps more powerful is that it’s easy to host your own AI to begin exploring this brave new world everyone is talking about. This isn’t hype - artificial intelligence is here to stay so try it out now, get used to it, get proficient at using it. Such profound tech shouldn’t be limited to those with the deepest pockets and Ollama has made LLMs available to everybody, which is how it should be. Just don’t forget about quantization.